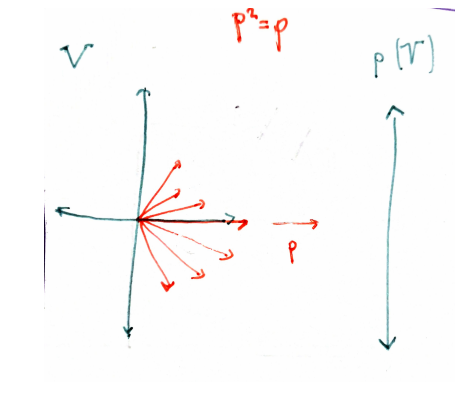

Projections

Given a vector space $V$, a projection is a linear operator $P: V \to V$ such that $P^2 = P$ (that is, $P$ is idempotent).

Roughly speaking, for a projection you need to specify not only the "target" subspace but also a "direction" along which to project (the red vectors in the picture above). So, in some sense, a projection gives us a decomposition of the vector space as the direct sum of its image (the target) and its kernel (the directions):

$$V = \operatorname{Im} P \oplus \ker P.$$

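In finite dimensional vector spaces, choosing a basis of $\operatorname{Im} P$ followed by a basis of $\ker P$, the matrix of $P$ is

$$P = \begin{pmatrix} I_r & 0 \\ 0 & 0 \end{pmatrix},$$

where $r = \dim \operatorname{Im} P$ and $I_r$ is the identity operator on $\operatorname{Im} P$. Every $v \in V$ can be decomposed as $v = Pv + (v - Pv)$, with $Pv \in \operatorname{Im} P$ and $v - Pv \in \ker P$ (because $P(v - Pv) = Pv - P^2 v = 0$).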
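To make the "target plus direction" picture concrete, here is a small NumPy sketch, assuming real vectors in $\mathbb{R}^n$. It builds the projection onto the span of some target vectors along the span of some direction vectors by changing to a basis made of both, keeping the target coordinates and zeroing the rest, i.e. $P = B \operatorname{diag}(1, \dots, 1, 0, \dots, 0) B^{-1}$. The helper name `oblique_projection` is hypothetical, not a library function.

```python
import numpy as np

def oblique_projection(target: np.ndarray, direction: np.ndarray) -> np.ndarray:
    """Projection onto span(target) along span(direction) (hypothetical helper).

    Assumes the columns of `target` and `direction` together form a basis of R^n.
    """
    basis = np.hstack([target, direction])          # change-of-basis matrix B
    k, d = target.shape[1], direction.shape[1]
    keep = np.diag([1.0] * k + [0.0] * d)           # keep target coordinates, zero the rest
    return basis @ keep @ np.linalg.inv(basis)      # P = B diag(1, ..., 1, 0, ..., 0) B^{-1}

# Project R^2 onto the x-axis along the direction (1, 1).
P = oblique_projection(np.array([[1.0], [0.0]]), np.array([[1.0], [1.0]]))
I = np.eye(2)
v = np.array([3.0, 2.0])

print(np.allclose(P @ P, P))                      # P is idempotent
print(np.allclose((I - P) @ (I - P), I - P))      # so is I - P
print(P @ v, (I - P) @ v)                         # approximately [1, 0] and [2, 2], which add up to v
```

The direction vector $(1, 1)$ is sent to $0$, so it lies in $\ker P$, while the x-axis is left fixed.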

Other properties of projections, valid even for infinite dimension, are:

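- The only eigenvalues allowed for a projection are $0$ and $1$, even in complex vector spaces: if $Pv = \lambda v$ with $v \neq 0$, then $\lambda v = Pv = P^2 v = \lambda^2 v$, so $\lambda^2 = \lambda$.
- The product of two projections is not, in general, another projection. But it is when they commute: if $PQ = QP$, then $(PQ)^2 = PQPQ = P^2 Q^2 = PQ$.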

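- Even more generally, projections are maps that have a right inverse: regarding $P$ as a map $V \to \operatorname{Im} P$, the inclusion $\iota: \operatorname{Im} P \hookrightarrow V$ satisfies $P \circ \iota = \mathrm{id}_{\operatorname{Im} P}$, because $P$ fixes every vector of its image.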
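A quick numerical check of the properties in this list, as a sketch with small real matrices chosen by hand for illustration (NumPy assumed). The non-commuting pair is the projection onto the x-axis and the orthogonal projection onto the line $y = x$; the commuting pair is two diagonal projections in $\mathbb{R}^3$.

```python
import numpy as np

# Oblique projection onto the x-axis along the direction (1, 1).
P = np.array([[1.0, -1.0],
              [0.0, 0.0]])

# Its eigenvalues are 0 and 1.
eigenvalues = np.sort(np.linalg.eigvals(P).real)
print(eigenvalues)

# Two projections that do not commute: their product is not idempotent.
E = np.array([[1.0, 0.0], [0.0, 0.0]])     # onto the x-axis
F = np.array([[0.5, 0.5], [0.5, 0.5]])     # onto the line y = x
EF = E @ F
print(np.allclose(E @ F, F @ E), np.allclose(EF @ EF, EF))   # False False

# Two commuting (diagonal) projections in R^3: their product is a projection.
A = np.diag([1.0, 1.0, 0.0])
B = np.diag([0.0, 1.0, 1.0])
AB = A @ B
print(np.allclose(A @ B, B @ A), np.allclose(AB @ AB, AB))   # True True

# Right inverse: as a map R^2 -> Im P = span{e1}, P is the 1x2 matrix [1, -1]
# (the coordinate along e1); the inclusion of Im P into R^2 is a right inverse.
P_onto = np.array([[1.0, -1.0]])
inclusion = np.array([[1.0], [0.0]])
print(np.allclose(P_onto @ inclusion, np.eye(1)))    # True
print(np.allclose(inclusion @ P_onto, P))            # True: P = inclusion composed with P_onto
```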

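When we work in a Hilbert space $H$, a projection $P$ is called orthogonal if

$$\langle Px, y \rangle = \langle x, Py \rangle \quad \text{for all } x, y \in H,$$

that is, if it is a self-adjoint operator ($P^* = P$).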

They satisfy the following properties:

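- Being orthogonal is equivalent to the image $\operatorname{Im} P$ and the kernel $\ker P$ being orthogonal subspaces. Let $x \in \operatorname{Im} P$ and $y \in \ker P$; then $Px = x$ and $Py = 0$, and so

$$\langle x, y \rangle = \langle Px, y \rangle = \langle x, Py \rangle = 0.$$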

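Reciprocally, any projection $P$ with $\operatorname{Im} P \perp \ker P$ is self-adjoint. Since $x - Px$ and $y - Py$ belong to $\ker P$, we have

$$\langle Px, y \rangle = \langle Px, Py \rangle + \langle Px, y - Py \rangle = \langle Px, Py \rangle$$

and

$$\langle x, Py \rangle = \langle Px, Py \rangle + \langle x - Px, Py \rangle = \langle Px, Py \rangle,$$

and therefore $\langle Px, y \rangle = \langle x, Py \rangle$.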

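- Given any closed subspace $M$ of $H$, there exists an orthogonal projection $P$ such that $\operatorname{Im} P = M$. The proof (Wikipedia) goes through taking the infimum of the distance $\inf_{m \in M} \|x - m\|$...
- If $P$ is an orthogonal projection, $I - P$ is an orthogonal projection and $\operatorname{Im}(I - P) = \ker P$.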

Proof:

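First, $(I - P)^2 = I - 2P + P^2 = I - P$, so $I - P$ is a projection; its image is its set of fixed points, $\{x : (I - P)x = x\} = \{x : Px = 0\} = \ker P$.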

And secondly, $(I - P)^* = I^* - P^* = I - P$, so $I - P$ is self-adjoint.

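- Orthogonal projections are bounded operators. Using $P^* = P$ and the Cauchy-Schwarz inequality,

$$\|Px\|^2 = \langle Px, Px \rangle = \langle x, P^2 x \rangle = \langle x, Px \rangle \le \|x\| \, \|Px\|,$$

and so $\|Px\| \le \|x\|$ for every $x \in H$ (trivially when $Px = 0$), that is, $\|P\| \le 1$.

For linear operators, being bounded is equivalent to being continuous, so this says that $P$ is continuous.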
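The properties in this list can be checked numerically. A sketch, assuming real vectors (so the adjoint is the transpose) and building the orthogonal projection onto a random 2-dimensional subspace $M \subseteq \mathbb{R}^4$ from an orthonormal basis given by NumPy's QR factorization; the seed and dimensions are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)

# Orthonormal basis (columns of U) of a random 2-dimensional subspace M of R^4.
U, _ = np.linalg.qr(rng.standard_normal((4, 2)))
P = U @ U.T               # orthogonal projection onto M
I = np.eye(4)
Q = I - P

x, y, v = rng.standard_normal((3, 4))
m = U @ rng.standard_normal(2)        # an arbitrary point of M

print(np.allclose(P @ P, P), np.allclose(P, P.T))    # idempotent and self-adjoint
print(np.isclose(np.dot(P @ x, Q @ y), 0.0))          # Im P is orthogonal to ker P = Im(I - P)
print(np.allclose(Q @ Q, Q), np.allclose(Q, Q.T))    # I - P is an orthogonal projection too
print(np.linalg.norm(v - P @ v) <= np.linalg.norm(v - m))   # Pv is the point of M closest to v
print(np.linalg.norm(P @ v) <= np.linalg.norm(v))     # ||Pv|| <= ||v||
print(np.linalg.norm(P, 2))                           # operator norm, approximately 1
```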

Matrix Representation of Orthogonal Projections

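Let’s start with the simplest case, where the subspace $W$ is one-dimensional, spanned by a unit vector $u$ ($\|u\| = 1$).

Then the orthogonal projection of any vector $v$ onto $W$ is

$$Pv = \langle u, v \rangle \, u,$$

with the inner product taken conjugate-linear in its first argument, $\langle u, v \rangle = u^* v$.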

This can be written compactly as:

$$P = u u^*$$

or, in Dirac notation:

$$P = |u\rangle\langle u|$$

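Here:

- $u$ is the column vector representing $|u\rangle$,
- $u^*$ is the row vector representing $\langle u|$ (the conjugate transpose of $u$),
- the operator $u u^*$ maps any $v$ to $u (u^* v) = \langle u, v \rangle \, u$.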

So this formula represents the projection operator onto the subspace spanned by $u$.

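This corresponds to the matrix multiplication

$$u u^* = \begin{pmatrix} u_1 \\ \vdots \\ u_n \end{pmatrix} \begin{pmatrix} \overline{u_1} & \cdots & \overline{u_n} \end{pmatrix} = \begin{pmatrix} u_1 \overline{u_1} & \cdots & u_1 \overline{u_n} \\ \vdots & \ddots & \vdots \\ u_n \overline{u_1} & \cdots & u_n \overline{u_n} \end{pmatrix}.$$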
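A minimal NumPy sketch of the rank-one case, assuming a hand-picked unit vector in $\mathbb{C}^3$. `np.outer(u, u.conj())` builds the matrix with entries $u_i \overline{u_j}$, and `np.vdot` conjugates its first argument, so `np.vdot(u, v)` is $u^* v = \langle u, v \rangle$.

```python
import numpy as np

u = np.array([1.0, 1.0j, 1.0]) / np.sqrt(3)    # unit vector in C^3
P = np.outer(u, u.conj())                       # u u^* (i.e. |u><u|), entries u_i * conj(u_j)

v = np.array([2.0, -1.0 + 1.0j, 0.5j])
coefficient = np.vdot(u, v)                     # <u, v> = u^* v

print(np.allclose(P @ v, coefficient * u))      # Pv = <u, v> u
print(np.allclose(P @ P, P), np.allclose(P, P.conj().T))   # idempotent and Hermitian
print(np.trace(P).real)                         # trace = ||u||^2 = 1, since P has rank one
```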

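Now, suppose we have a $k$-dimensional subspace $W \subseteq \mathbb{C}^n$ with an orthonormal basis $u_1, \dots, u_k$.

Let’s form a matrix whose columns are the basis vectors:

$$U = \begin{pmatrix} u_1 & u_2 & \cdots & u_k \end{pmatrix} \in \mathbb{C}^{n \times k}.$$

Note: Each $u_i$ is a column vector in $\mathbb{C}^n$, and orthonormality of the columns means $U^* U = I_k$.

Then the orthogonal projection operator onto $W$ is

$$P = U U^* = \sum_{i=1}^{k} u_i u_i^*.$$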

The intuition is: $U^*$ projects a vector $v$ onto its coordinates $U^* v = (\langle u_1, v \rangle, \dots, \langle u_k, v \rangle)^T$ in the subspace, using the orthonormal basis $\{u_i\}$; $U$ then maps those coordinates back into the original space as a linear combination of the basis vectors.
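The two steps above can be sketched in NumPy as follows, assuming the subspace is given as the column space of a full-column-rank matrix `A` and taking the orthonormal basis from a reduced QR factorization. The helper `orthogonal_projector` is hypothetical, and the example subspace $W = \operatorname{span}\{(1, 1, 0), (0, 1, 1)\} \subset \mathbb{R}^3$ is chosen only for illustration.

```python
import numpy as np

def orthogonal_projector(A: np.ndarray) -> np.ndarray:
    """Return U U^* for an orthonormal basis U of the column space of A (hypothetical helper).

    Assumes A has full column rank; U comes from a reduced QR factorization.
    """
    U, _ = np.linalg.qr(A)
    return U @ U.conj().T

A = np.array([[1.0, 0.0],
              [1.0, 1.0],
              [0.0, 1.0]])          # W = span{(1, 1, 0), (0, 1, 1)}
U, _ = np.linalg.qr(A)
v = np.array([1.0, 2.0, 3.0])

coords = U.conj().T @ v             # step 1: coordinates <u_i, v> in the basis {u_i}
Pv = U @ coords                     # step 2: back to R^3 as sum_i coords[i] * u_i

P = orthogonal_projector(A)
print(np.allclose(P @ v, Pv))                                        # same as the two steps
print(np.allclose(U.conj().T @ U, np.eye(2)))                        # U^* U = I_k
print(np.allclose(P, sum(np.outer(U[:, i], U[:, i].conj()) for i in range(2))))   # sum of u_i u_i^*
print(np.allclose(P, A @ np.linalg.solve(A.T @ A, A.T)))             # equals A (A^T A)^{-1} A^T
print(np.allclose(A.T @ (v - Pv), 0.0))                              # residual is orthogonal to W
print(Pv)                                                            # approximately [1/3, 8/3, 7/3]
```

QR only determines the basis up to signs of the columns, but $U U^*$ does not depend on that choice, which is why the result matches $A (A^T A)^{-1} A^T$.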