Backpropagation

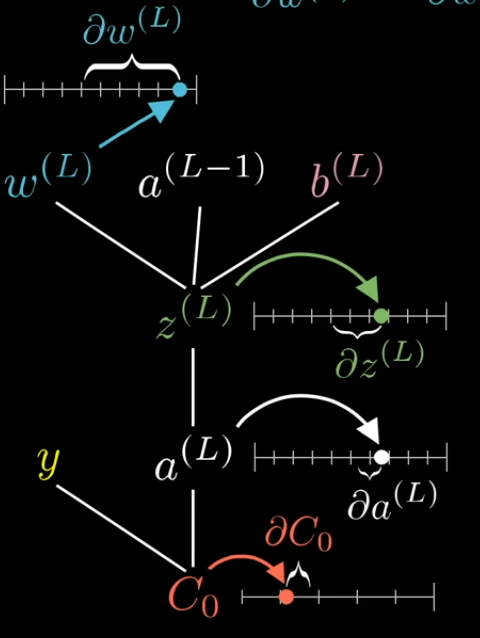

In the context of neural networks, backpropagation is a method for computing the gradients of the loss function with respect to the network's weights. Gradient descent (or one of its variants) then uses these gradients to update the weights. Each update takes a small step in the direction of the negative gradient, with the goal of reaching a (typically local) minimum of the loss.
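As a minimal sketch of the update rule alone, not tied to any particular network, the loop below runs plain gradient descent on a hypothetical one-parameter loss L(w) = (w - 3)^2. Its gradient 2(w - 3) stands in for what backpropagation would supply in a real network. The names `loss_grad` and `gradient_descent`, the learning rate 0.1, and the step count are illustrative assumptions.

```python
def loss_grad(w: float) -> float:
    """Gradient of the illustrative loss L(w) = (w - 3)^2 (hypothetical example loss)."""
    return 2.0 * (w - 3.0)


def gradient_descent(w0: float, learning_rate: float = 0.1, steps: int = 100) -> float:
    """Start at w0 and repeatedly take a small step against the gradient."""
    w = w0
    for _ in range(steps):
        # Step in the direction of the negative gradient, scaled by the learning rate.
        w -= learning_rate * loss_grad(w)
    return w


if __name__ == "__main__":
    w_final = gradient_descent(w0=-5.0)
    print(f"w after descent: {w_final:.6f}")  # approaches the minimizer w = 3
```

With a learning rate of 0.1, each step shrinks the distance to the minimizer by a factor of 0.8. A much larger learning rate can overshoot and diverge, which is why the step is kept small.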

One neuron per layer

From this video
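A worked version of the one-neuron case follows, as a sketch under assumed notation because the symbols are not defined here. Each layer has a single neuron with weight $w^{(L)}$, bias $b^{(L)}$, sigmoid-like activation $\sigma$, and a squared-error cost $C_0$ for one training example with target $y$:

$$
\begin{aligned}
z^{(L)} &= w^{(L)} a^{(L-1)} + b^{(L)}, \qquad a^{(L)} = \sigma\big(z^{(L)}\big), \qquad C_0 = \big(a^{(L)} - y\big)^2 \\[4pt]
\frac{\partial C_0}{\partial w^{(L)}} &= \frac{\partial z^{(L)}}{\partial w^{(L)}}\,\frac{\partial a^{(L)}}{\partial z^{(L)}}\,\frac{\partial C_0}{\partial a^{(L)}} = a^{(L-1)}\,\sigma'\big(z^{(L)}\big)\,2\big(a^{(L)} - y\big) \\
\frac{\partial C_0}{\partial b^{(L)}} &= \sigma'\big(z^{(L)}\big)\,2\big(a^{(L)} - y\big) \\
\frac{\partial C_0}{\partial a^{(L-1)}} &= w^{(L)}\,\sigma'\big(z^{(L)}\big)\,2\big(a^{(L)} - y\big)
\end{aligned}
$$

The last line lets the chain continue backward. For example, $\frac{\partial C_0}{\partial w^{(L-1)}} = a^{(L-2)}\,\sigma'\big(z^{(L-1)}\big)\,\frac{\partial C_0}{\partial a^{(L-1)}}$, and so on down to the first layer.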

Two neurons per layer

To compute

we need to follow the chain rule for derivatives through the layers of the network.
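The chain rule for a layer with two neurons can be sketched in plain Python under several stated assumptions. Layer L is the output layer and both it and layer L-1 have two neurons. `W[j][k]` (hypothetical indexing) is the weight from neuron k in layer L-1 to neuron j in layer L, and the activation is the sigmoid. The cost for one example is C_0 = sum_j (a_j - y_j)^2. The function names are illustrative. The analytic gradients are checked against central finite differences.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(W, b, a_prev):
    """Pre-activations z_j = sum_k W[j][k] * a_prev[k] + b[j] and activations a_j = sigmoid(z_j)."""
    z = [sum(W[j][k] * a_prev[k] for k in range(len(a_prev))) + b[j] for j in range(len(b))]
    a = [sigmoid(zj) for zj in z]
    return z, a


def cost(a, y):
    """Squared-error cost C_0 = sum_j (a_j - y_j)^2 for one training example."""
    return sum((aj - yj) ** 2 for aj, yj in zip(a, y))


def backward(W, b, a_prev, y):
    """Chain rule through one layer: returns dC/dW, dC/db and dC/da_prev."""
    _, a = forward(W, b, a_prev)
    # delta_j = dC/dz_j = sigma'(z_j) * 2(a_j - y_j), using sigma'(z) = a(1 - a) for the sigmoid.
    delta = [a[j] * (1.0 - a[j]) * 2.0 * (a[j] - y[j]) for j in range(len(a))]
    # dC/dW[j][k] = a_prev[k] * delta_j, since dz_j/dW[j][k] = a_prev[k].
    dW = [[delta[j] * a_prev[k] for k in range(len(a_prev))] for j in range(len(a))]
    db = delta[:]
    # a_prev[k] feeds every neuron j in layer L, so its gradient sums over j.
    da_prev = [sum(W[j][k] * delta[j] for j in range(len(a))) for k in range(len(a_prev))]
    return dW, db, da_prev


def numeric_dW(W, b, a_prev, y, j, k, eps=1e-6):
    """Central finite-difference estimate of dC/dW[j][k], used only as a check."""
    W_plus = [row[:] for row in W]
    W_minus = [row[:] for row in W]
    W_plus[j][k] += eps
    W_minus[j][k] -= eps
    c_plus = cost(forward(W_plus, b, a_prev)[1], y)
    c_minus = cost(forward(W_minus, b, a_prev)[1], y)
    return (c_plus - c_minus) / (2 * eps)


if __name__ == "__main__":
    # Arbitrary illustrative values.
    W = [[0.5, -0.3], [0.8, 0.2]]
    b = [0.1, -0.1]
    a_prev = [0.6, 0.9]
    y = [1.0, 0.0]
    dW, db, da_prev = backward(W, b, a_prev, y)
    for j in range(2):
        for k in range(2):
            print(j, k, dW[j][k], numeric_dW(W, b, a_prev, y, j, k))
```

The running sum in `da_prev` is the main difference from the one-neuron case. Each activation in layer L-1 influences every neuron in layer L, so its gradient collects one term from each of them.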

Note thatonly affects , so it only influences and thus only the first term of the cost.
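The locality in the note above can be checked numerically. This sketch assumes the weight in question feeds the first output neuron, written here as `W[0][1]` (a hypothetical choice of index). It also assumes the same illustrative setup as a two-neuron sigmoid output layer with cost C_0 = sum_j (a_j - y_j)^2. Nudging that weight changes the first cost term and leaves the second untouched.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def cost_terms(W, b, a_prev, y):
    """Per-neuron terms (a_j - y_j)^2 of the cost C_0 = sum_j (a_j - y_j)^2."""
    terms = []
    for j in range(len(b)):
        # Output neuron j only reads row W[j] of the weight matrix.
        z_j = sum(W[j][k] * a_prev[k] for k in range(len(a_prev))) + b[j]
        terms.append((sigmoid(z_j) - y[j]) ** 2)
    return terms


if __name__ == "__main__":
    # Arbitrary illustrative values.
    W = [[0.5, -0.3], [0.8, 0.2]]
    b = [0.1, -0.1]
    a_prev = [0.6, 0.9]
    y = [1.0, 0.0]
    before = cost_terms(W, b, a_prev, y)
    W_nudged = [row[:] for row in W]
    W_nudged[0][1] += 0.01  # hypothetical nudge to a weight feeding output neuron 0 only
    after = cost_terms(W_nudged, b, a_prev, y)
    print("term 0 changed:", before[0] != after[0])  # True
    print("term 1 changed:", before[1] != after[1])  # False
```

Because only the first term depends on that weight, its partial derivative of C_0 needs just one chain: weight, then the first neuron's pre-activation and activation, then the first cost term.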