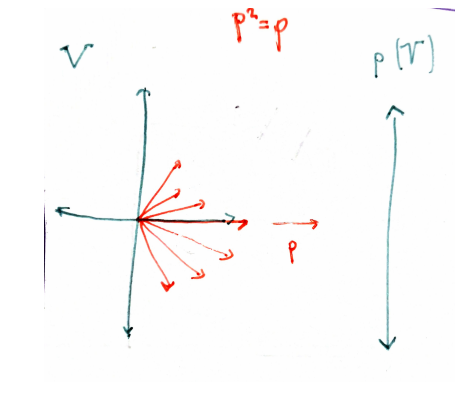

Projections

Given a vector space $V$, a projection is a linear map $P: V \to V$

such that

$$P^2 = P.$$

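Informally speaking, for a projection you not only need to specify the "target" subspace, but also a "direction" along which to project (the red vectors in the picture above). So, in some sense, a projection gives us a decomposition of the vector space.

In finite dimensional vector spaces, $V = \operatorname{im}(P) \oplus \ker(P)$, and the restriction of $P$ to $\operatorname{im}(P)$

is the identity operator. Every $v \in V$ can be decomposed as

$$v = Pv + (v - Pv), \qquad Pv \in \operatorname{im}(P), \quad v - Pv \in \ker(P).$$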
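To make the decomposition concrete, here is a minimal sketch in plain Python. The matrix `P` is a hypothetical example (not taken from the picture): it projects onto the x-axis along the direction $(1, 1)$, so it is a projection that is not orthogonal.

```python
# Hypothetical example: projection onto the x-axis along the direction (1, 1).
# Matrices are stored as lists of rows.
P = [[1, -1],
     [0, 0]]

def matvec(A, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

# Idempotence: applying P twice is the same as applying it once.
print(matmul(P, P) == P)  # True

v = [3, 2]
image_part = matvec(P, v)                             # component in im(P)
kernel_part = [x - y for x, y in zip(v, image_part)]  # component in ker(P)

print(image_part)               # [1, 0]
print(kernel_part)              # [2, 2]
print(matvec(P, kernel_part))   # [0, 0], so kernel_part really is in ker(P)
print(matvec(P, image_part))    # [1, 0], P acts as the identity on im(P)
```

Note that `kernel_part` is a multiple of $(1, 1)$, the chosen "direction" of the projection.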

Other properties, valid even for infinite dimension:

- The only eigenvalues allowed for a projection are $0$ and $1$, even in complex vector spaces: if $Pv = \lambda v$ with $v \neq 0$, then $P^2 = P$ forces $\lambda^2 = \lambda$.

- The product of two projections is not, in general, another projection. But it is when they commute.

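- Even more generally, projections can be understood as maps $\pi: V \to W$ that have a right inverse $s: W \to V$: $\pi \circ s = \operatorname{id}_W$. In that case $s \circ \pi$ is idempotent.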
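The claims about eigenvalues and products can be checked on small matrices. The sketch below uses hypothetical $2 \times 2$ examples (`P`, `Q`, `R` are names chosen here for illustration) and exact rational arithmetic, so the equality tests are not affected by rounding.

```python
from fractions import Fraction

def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def is_projection(A):
    """A matrix is a projection exactly when it is idempotent."""
    return matmul(A, A) == A

half = Fraction(1, 2)
P = [[1, 0], [0, 0]]              # projection onto the x-axis
Q = [[half, half], [half, half]]  # projection onto the line y = x
R = [[0, 0], [0, 1]]              # projection onto the y-axis

# P and Q do not commute, and their product is not idempotent.
print(matmul(P, Q) == matmul(Q, P))  # False
print(is_projection(matmul(P, Q)))   # False

# P and R commute, and their product (the zero map) is a projection.
print(matmul(P, R) == matmul(R, P))  # True
print(is_projection(matmul(P, R)))   # True

# The eigenvalues of a 2x2 matrix are the roots of t^2 - trace*t + det.
trace = Q[0][0] + Q[1][1]
det = Q[0][0] * Q[1][1] - Q[0][1] * Q[1][0]
print(trace, det)  # 1 0
```

With trace $1$ and determinant $0$, the characteristic polynomial of `Q` is $t^2 - t$, whose roots are exactly $0$ and $1$.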

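When we work in a Hilbert space $H$, a projection $P$ is called orthogonal if

$$\langle Px, y \rangle = \langle x, Py \rangle \quad \text{for all } x, y \in H,$$

that is, $P$ is a self-adjoint operator.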
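As a quick sanity check of the self-adjointness condition, the sketch below compares two hypothetical projections of $\mathbb{R}^2$ with the standard inner product: `P_orth`, built as an outer product from a unit vector, and `P_obl`, an oblique projection onto the x-axis. In this real setting with the standard basis, self-adjoint just means that the matrix is symmetric. Testing the inner product identity on a single pair of vectors can only disprove self-adjointness, so the symmetry check is the conclusive one.

```python
from fractions import Fraction

def matvec(A, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def dot(x, y):
    """Standard inner product on R^n."""
    return sum(a * b for a, b in zip(x, y))

def is_symmetric(A):
    """Self-adjoint for a real matrix in the standard basis."""
    return A == [list(col) for col in zip(*A)]

u = [Fraction(3, 5), Fraction(4, 5)]          # unit vector: 9/25 + 16/25 = 1
P_orth = [[ui * uj for uj in u] for ui in u]  # orthogonal projection onto span(u)
P_obl = [[1, -1], [0, 0]]                     # oblique projection onto the x-axis

print(is_symmetric(P_orth))  # True
print(is_symmetric(P_obl))   # False

# The defining identity <Px, y> = <x, Py> on one sample pair of vectors.
x = [2, 1]
y = [-1, 3]
print(dot(matvec(P_orth, x), y) == dot(x, matvec(P_orth, y)))  # True
print(dot(matvec(P_obl, x), y) == dot(x, matvec(P_obl, y)))    # False
```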

They satisfy the following properties:

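- Being orthogonal is equivalent to the fact that the image and the kernel are orthogonal subspaces: let $x \in \operatorname{im}(P)$ and $y \in \ker(P)$; then $Px = x$ and $Py = 0$, and so

$$\langle x, y \rangle = \langle Px, y \rangle = \langle x, Py \rangle = 0.$$

Reciprocally, for any projection $P$ whose image and kernel are orthogonal, and any $x, y \in H$, we have $Px, Py \in \operatorname{im}(P)$ and $x - Px,\ y - Py \in \ker(P)$, so

$$\langle Px, y - Py \rangle = 0$$

and

$$\langle x - Px, Py \rangle = 0$$

and therefore

$$\langle Px, y \rangle = \langle Px, Py \rangle = \langle x, Py \rangle.$$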

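- Given any closed subspace $U$ of $H$, there exists an orthogonal projection $P_U$ such that $\operatorname{im}(P_U) = U$. The proof (wikipedia) goes through taking the infimum of the distance...

- If $P$ is an orthogonal projection, $I - P$ is an orthogonal projection and $\operatorname{im}(I - P) = \ker(P)$.

Proof:

First, $I - P$ is idempotent:

$$(I - P)^2 = I - 2P + P^2 = I - P.$$

And secondly, it is self-adjoint:

$$\langle (I - P)x, y \rangle = \langle x, y \rangle - \langle Px, y \rangle = \langle x, y \rangle - \langle x, Py \rangle = \langle x, (I - P)y \rangle.$$

For the image: $P(I - P)x = Px - P^2 x = 0$, and if $Px = 0$ then $x = (I - P)x$.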

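- Orthogonal projections are bounded operators. From Cauchy-Schwarz,

$$\|Px\|^2 = \langle Px, Px \rangle = \langle Px, x \rangle \le \|Px\| \, \|x\|$$

and so

$$\|Px\| \le \|x\|.$$

For a linear operator, being bounded is equivalent to saying that $P$ is continuous.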

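- Suppose $U$ has dimension 1, and we take a length 1 vector $u \in U$. If we fix an orthonormal basis we can express

$$P_U = u \, u^{*}$$

(where $u^{*}$ is the conjugate transpose of the column vector $u$), or

$$P_U = |u\rangle \langle u|$$

in Dirac bracket notation. I guess that is like taking an element of the tensor product $H \otimes H^{*}$.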

This formula can be generalized for a projection on a subspace